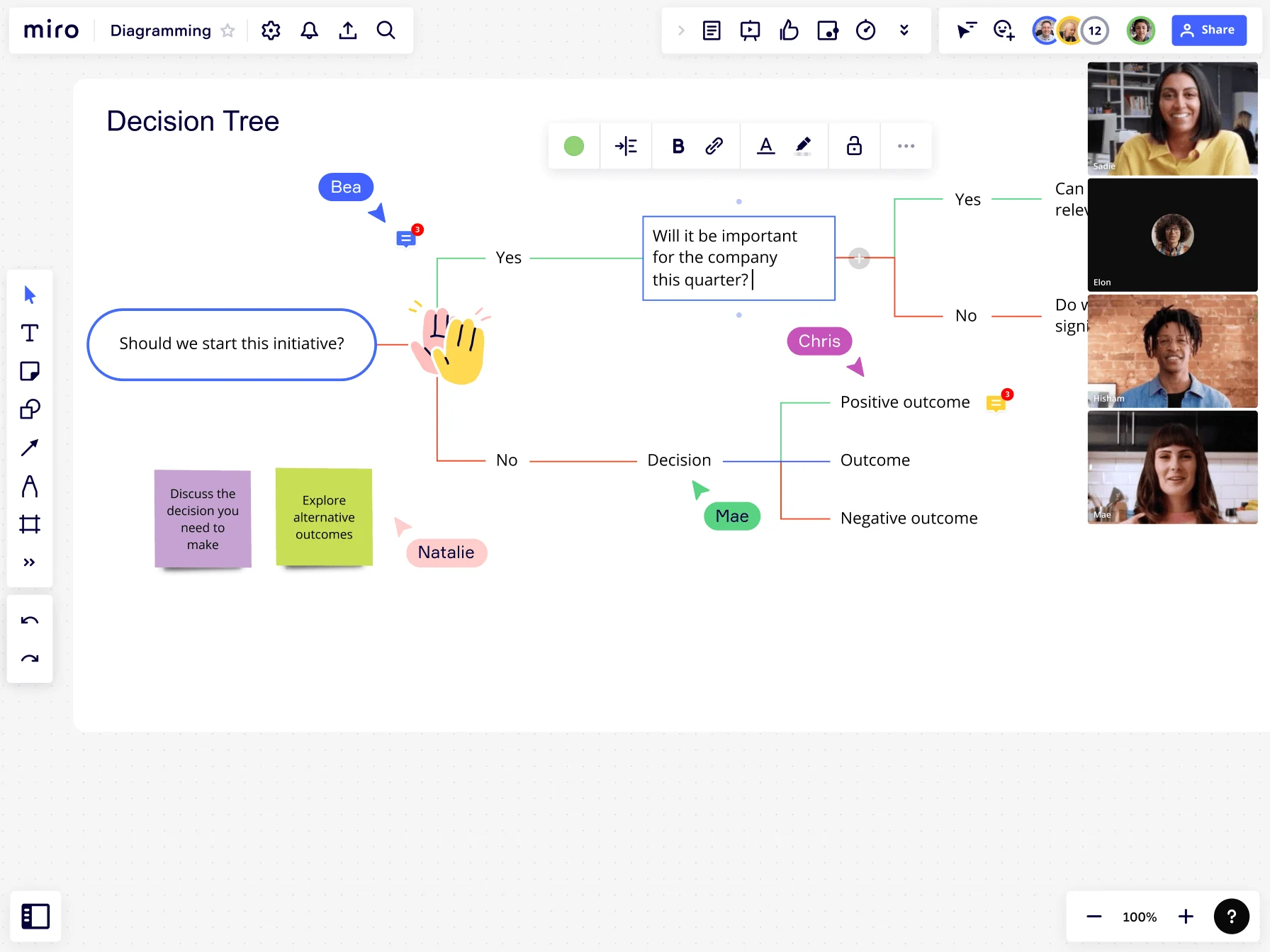

A guide to decision trees

Decision trees are simple yet powerful tools that can help you and your team solve a range of problems — from everyday challenges to complex issues. Our guide covers everything you need to know, including what a decision tree is, how to make one, examples, and more.

What is a decision tree?

A decision tree is a visual tool that helps businesses and individuals make choices by mapping out possible outcomes and consequences. Creating a decision tree allows users to weigh different opportunities and map a pathway to the desired result.

Decision trees take their inspiration from a tree. They usually start with a single node from which different branches emerge. Each branch leads to another node representing a unique decision or opportunity stemming from the original question node. A branch is usually the action or answer to a question which, if taken, leads to the next node. This format allows you to map out how your decisions and actions will lead to different outcomes in the future.

Decision trees are useful for businesses with multiple opportunities because they help decide which ones to prioritize and which to leave behind. Visualizing the outcomes of your decisions can help teams make informed strategic decisions and lay out long-term plans clearly and concisely. But even a simple decision tree diagram can help you make decisions in everyday life.

Decision tree symbols and shapes

Most decision trees use a set of standard shapes and symbols. This makes it easier to share them between different groups and for everyone to understand. Here are some of the common decision tree symbols:

Decision node (usually squares)

The squares in the diagram indicate a decision that needs to be made

Chance nodes (usually circles)

The circles in the diagram indicate an event with multiple uncertain outcomes

End nodes (usually triangles)

The triangles in the diagram indicate the outcome

Branches (lines)

Each branch in the decision tree indicates the pathway to a possible outcome or action
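To show how the three node types can work together, here is a minimal Python sketch. It assumes one common way of evaluating a tree, which this guide does not prescribe: end nodes carry a value, chance nodes average their outcomes by probability, and decision nodes pick the best branch. The `rollback` function, the dictionary layout, and all probabilities and values are made up for illustration.

```python
def rollback(node):
    """Return the value of a node by working back from the end nodes."""
    kind = node["kind"]
    if kind == "end":
        # End node (triangle): the outcome already has a value.
        return node["value"]
    if kind == "chance":
        # Chance node (circle): weight each uncertain outcome by its probability.
        return sum(p * rollback(child) for p, child in node["branches"])
    if kind == "decision":
        # Decision node (square): choose the branch with the best value.
        return max(rollback(child) for _, child in node["branches"])
    raise ValueError(f"Unknown node kind: {kind}")


# Hypothetical numbers: launching has a 50/50 chance of a gain or a loss.
tree = {
    "kind": "decision",
    "branches": [
        ("launch", {
            "kind": "chance",
            "branches": [
                (0.5, {"kind": "end", "value": 100}),
                (0.5, {"kind": "end", "value": -20}),
            ],
        }),
        ("wait", {"kind": "end", "value": 30}),
    ],
}

print(rollback(tree))  # 40.0
```

In this made-up case, launching is worth 40 on average, which beats the certain 30 from waiting.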

How to make a decision tree

Miro’s ready-made decision tree templates are fantastic digital tools to explore, plan, and predict the outcomes of your decisions. If you’re new to this topic, this step-by-step decision tree guide will help you create one.

1. Define the question

Before creating your decision tree, you need to understand the question you are asking. Every decision or choice you make must start with a question in the opening node. Take a look at the simple decision tree diagram example below. The question “Should we start this initiative?” starts the diagram.

2. Add branches

Once you’ve defined your question and added it to the opening node, it’s time to consider all the possible actions you can take to answer that question. Each possibility or answer must be represented by its own branch. The example above shows a closed question, which means there are only two initial branches: yes and no.

3. Add leaves

At the end of each branch, you need to add a leaf, or node. This will be a prediction of the outcome of the action that you took. Ask yourself a question in this style: “What will happen if I take branch A?” The answer to that question should be a statement, and you should add that statement to the leaf at the end of the branch. If it takes several follow-up questions to get from the opening question to a statement, you will need to add more branches.

4. Terminate branches

Once your branches have no more questions or possible actions, it’s time for you to close off the decision tree with the final triangular nodes. The statement within this node will be the predicted outcome based on the branch you decided to follow.
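The four steps above can also be sketched in a few lines of Python. This is only an illustration: the `Question` and `Leaf` classes, the `follow` helper, the follow-up question, and the outcome statements are all hypothetical. Only the opening question comes from this guide.

```python
from dataclasses import dataclass, field
from typing import Dict, Union


@dataclass
class Leaf:
    """End node: a statement predicting the outcome of a path."""
    outcome: str


@dataclass
class Question:
    """Node holding a question; each branch is an answer or action."""
    text: str
    branches: Dict[str, Union["Question", Leaf]] = field(default_factory=dict)


def follow(node, answers):
    """Take one branch per answer and return the predicted outcome."""
    for answer in answers:
        if isinstance(node, Leaf):
            break
        node = node.branches[answer]
    if isinstance(node, Question):
        raise ValueError(f"Path ends on an open question: {node.text}")
    return node.outcome


# Step 1: define the question in the opening node.
tree = Question("Should we start this initiative?")

# Step 2: add one branch per possible answer (a closed question has two).
# Steps 3 and 4: end each branch with a follow-up question or a final statement.
tree.branches["no"] = Leaf("Keep the current roadmap")
tree.branches["yes"] = Question(
    "Do we have the budget?",
    {
        "yes": Leaf("Launch a pilot this quarter"),
        "no": Leaf("Request funding first"),
    },
)

print(follow(tree, ["yes", "no"]))  # Request funding first
```

A path that stops on a question rather than a statement raises an error, which mirrors step 4: every branch should end in a final node.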

Examples of decision tree diagrams

Let’s take a look at some examples of decision trees to better understand how they work:

Simple decision tree

This decision tree example refers to the diagram in its simplest form. As the name implies, decision trees are based on the characteristics of a tree, with branches representing the various decisions you could make to solve an overarching problem. In other words, a simple decision tree is designed to help you explore a series of if-then statements until you reach a final decision. Though anyone can benefit from a simple decision tree, it’s ideal for beginners who’ve never worked with these types of diagrams before.

Classification and Regression Trees (CART)

CART is a type of machine learning algorithm that uses decision trees to sort data into groups (classification) and predict numerical values (regression). Whether it's sorting fruits by color or predicting someone's height based on age, CART makes these decisions by repeatedly splitting data into groups, creating a tree-like structure for classification or prediction.
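As a rough illustration of how one such split can be chosen, the sketch below searches for the threshold that gives the purest groups, measured by Gini impurity, a measure commonly used for CART classification. It handles a single split only, not a full tree or regression. The fruit data, the feature layout (hue in degrees, weight in grams), and the function names are made up for the example.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 when every label in the group is the same."""
    total = len(labels)
    if total == 0:
        return 0.0
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_split(rows, labels):
    """Find the (feature, threshold) whose split has the lowest weighted impurity."""
    best = None
    best_score = gini(labels)  # a split must beat the unsplit group
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [lab for row, lab in zip(rows, labels) if row[feature] <= threshold]
            right = [lab for row, lab in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # not a real split
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (feature, threshold), score
    return best, best_score


# Hypothetical fruits described as [hue in degrees, weight in grams].
rows = [[5, 150], [10, 170], [115, 120], [120, 130]]
labels = ["apple", "apple", "lime", "lime"]

print(best_split(rows, labels))  # ((0, 10), 0.0)
```

Here the best split is on feature 0 (hue) at a threshold of 10, which separates the two fruits perfectly. A full tree would repeat the same search inside each resulting group.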

Random forests

Just as forests are made up of multiple trees, the random forest algorithm leverages multiple decision trees and combines their predictions. By gathering insights from various trees, it increases the chances of an accurate prediction. Random forests can be helpful for tasks such as medical diagnoses, financial credit scoring, and product recommendations on e-commerce sites.
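The sketch below illustrates only the combining step, using a majority vote. It is a simplification: the three "trees" are hand-written rules with invented thresholds, whereas a real random forest would learn many trees from random samples of the training data. The applicant fields and function names are hypothetical.

```python
from collections import Counter


# Three hypothetical, hand-written "trees". A real forest would learn
# its trees from random samples of the training data.
def tree_income(applicant):
    return "approve" if applicant["income"] >= 40_000 else "decline"


def tree_debt(applicant):
    return "approve" if applicant["debt_ratio"] < 0.4 else "decline"


def tree_history(applicant):
    return "approve" if applicant["late_payments"] == 0 else "decline"


def forest_predict(trees, applicant):
    """Combine the trees' predictions by majority vote."""
    votes = Counter(tree(applicant) for tree in trees)
    return votes.most_common(1)[0][0]


applicant = {"income": 52_000, "debt_ratio": 0.55, "late_payments": 0}
print(forest_predict([tree_income, tree_debt, tree_history], applicant))  # approve
```

Two of the three trees vote to approve, so the forest approves even though one tree disagrees.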

Advantages and disadvantages of decision trees

Like all decision-making diagrams, decision trees have pros and cons, and they are not the right tool for every job. Considering the benefits and limitations of decision trees will help clarify when and why to use one.

Advantages

Here are five benefits of using decision trees worth keeping in mind:

Versatile

Decision trees are highly versatile tools that individuals, teams, or companies can use. You can use them for mapping out simple, everyday decisions, as in the example above. Or you can use them to visualize multi-layered decisions, complex data sets, and machine learning algorithms.

Easy to interpret

One of the most significant advantages of decision trees is that they are easy to understand and analyze. Even if a decision tree depicts a complex decision, the graphic, simple layout makes it intuitive for all team members to read.

Can handle any type of data

Decision trees can display a wide variety of numerical or categorical data. This makes decision trees helpful in various contexts, from machine learning to complex decision-making.

Easy to edit and update

The nature of decision trees means that they can easily be edited and updated, for instance, if an additional option is added to the equation. They are dynamic rather than static tools, which is crucial for teams needing to adapt to change and stay current. With Miro’s online decision tree maker, you can easily update any data point and edit your diagram to get back on track.

Help you consider the consequences of your decisions

Decision trees allow you to consider the outcomes and consequences of different choices carefully. By exploring all possible scenarios, you can assess which course of action is most beneficial before deciding.

Disadvantages

Here are three limitations to using decision trees to keep in mind:

They are unstable

Even though decision trees are easy to update and change, they can be unstable: a slight change in the underlying information or in a single decision can lead to significant changes in the structure of the whole tree.

They can be inaccurate

One of the inherent risks of relying too heavily on a decision tree is that it is almost impossible to predict the future and the consequences of real-life decisions. The outcomes in a decision tree are estimates, so they may be inaccurate.

They may not be suitable for complex calculations

Although decision trees can be used for complex scenarios, they are simple diagrams and may not be ideal for complex calculations with hundreds of variables. They have the potential to offer a false sense of security when making complicated decisions with major ramifications.

When to use a decision tree

The beauty of decision trees is their flexibility and robustness. This makes them popular tools in many different professional and personal contexts. Here’s how to apply decision trees in a few different scenarios:

Everyday decision-making

Anyone can use a decision tree to help them make everyday decisions. They are flexible enough to cover and display complex or straightforward decisions. Creating a decision tree is a valuable exercise that encourages deep thinking and consequence consideration with visual cues. The diagrams can be helpful to anyone who wants to ponder the effects of choices and opportunities that arise in everyday life.

Assessing business growth opportunities

Decision trees can benefit companies looking to expand their business and determine a long-term strategic plan. They need to predict the outcomes of their decisions before investing time and money into an action plan. Part of running a business is about taking calculated risks, and a decision tree will allow you to take risks while being savvy and safe. Whether this means buying and selling stock, taking on investors, or implementing a new marketing campaign, business owners must carefully assess the risks and opportunities. Try Miro's action templates to set clear objectives.

Decision trees in machine learning

Decision trees have become increasingly popular in machine learning because they provide a way to represent an algorithm using only conditional control statements. Decision analysis of this kind is commonly used in data mining to reach a particular goal. Decision trees are also used in supervised machine learning, a subcategory of machine learning in which inputs and their corresponding known outputs are used as training data. The data going through the decision tree is repeatedly split according to specific parameters.

Decision trees for data classification

Decision trees are used as classification and regression models in programming languages like Python and JavaScript. They help break down a data set into smaller subsets, making it easier to sort and classify long lists of data into separate groups. In this context, each decision tree branch represents the outcome of a test, each leaf represents a class, and the path from the root to a leaf represents a rule for classification. Professionals in the information technology industry use classification decision trees to streamline coding processes and save time.
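To make the idea of paths as classification rules concrete, here is a small Python sketch that walks a tree and prints one if-then rule per path between the root and a leaf. The nested-dictionary layout, the `extract_rules` function, and the fruit features and thresholds are assumptions chosen for illustration, not a standard format.

```python
def extract_rules(node, conditions=()):
    """Yield one 'IF ... THEN ...' rule for every root-to-leaf path."""
    if "label" in node:
        # Leaf: the conditions collected so far form a complete rule.
        yield "IF " + " AND ".join(conditions) + " THEN " + node["label"]
        return
    feature, threshold = node["feature"], node["threshold"]
    # Left branch: the test passed. Right branch: the test failed.
    yield from extract_rules(node["left"], conditions + (f"{feature} <= {threshold}",))
    yield from extract_rules(node["right"], conditions + (f"{feature} > {threshold}",))


# Hypothetical tree that classifies fruit by hue and weight.
tree = {
    "feature": "hue",
    "threshold": 10,
    "left": {"label": "apple"},
    "right": {
        "feature": "weight",
        "threshold": 125,
        "left": {"label": "lime"},
        "right": {"label": "pear"},
    },
}

for rule in extract_rules(tree):
    print(rule)
# IF hue <= 10 THEN apple
# IF hue > 10 AND weight <= 125 THEN lime
# IF hue > 10 AND weight > 125 THEN pear
```

Each printed line corresponds to exactly one leaf, so a tree with three leaves produces three rules.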

Influence diagram versus decision tree

An influence diagram is closely related to a decision tree, even more so than a fishbone diagram. The main difference between the two is that an influence diagram shows the conditional relationships and dependencies between different variables, while a decision tree offers more detail about each possible choice and outcome. The number of nodes in a decision tree can grow exponentially as decisions are added, whereas an influence diagram gives a more compact representation of the possible decisions. Because it gives greater detail, a decision tree can become more complicated and messy than an influence diagram. You can use an influence diagram to summarize the information shown in a decision tree. In this way, influence diagrams and decision trees are complementary techniques that can present the same data.
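A quick calculation shows how fast the node count can grow. The sketch assumes a worst case in which every path faces the same sequence of decisions and each decision has the same number of options. The function name `full_tree_nodes` is made up for the example.

```python
def full_tree_nodes(decisions, options=2):
    """Count the nodes in a tree with `decisions` levels of `options` choices each."""
    # Level 0 is the opening node; each later level multiplies the count.
    return sum(options ** level for level in range(decisions + 1))


for n in (1, 5, 10):
    print(n, full_tree_nodes(n))
# 1 3
# 5 63
# 10 2047
```

Under these assumptions, ten yes-or-no decisions already produce more than two thousand nodes, while an influence diagram of the same problem would need roughly one node per decision.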

Fishbone diagram versus decision tree

Although fishbone diagrams are similar to decision trees, they have some important differences. While decision trees are decision-making tools, fishbone diagrams are “cause and effect tools.” Teams use fishbone diagrams to identify defects, variations, or particular successes in a business process. In this sense, fishbone diagrams look backward more than forward. They help drill down to the potential root causes of a problem. Decision trees, on the other hand, are more forward-looking. They try to predict the outcomes and consequences of a process or decision. Although the two diagrams look similar, they represent different things.