Data is the backbone of modern organizations. Teams use data to gain insights about customer behavior, audit and optimize operations, forecast future events, and uncover hidden trends that can lead to growth.

But while data is critical, many organizations still struggle to harness it effectively. For example, their datasets may be expansive, disconnected, or siloed across numerous platforms, which makes it difficult to aggregate them and draw tangible insights that drive positive change.

Luckily, there are tools that can help, including Azure Data Factory. Below, we’ll dive into ADF, its benefits, and how you can use it at your organization to turn your data into action.

What is Azure Data Factory (ADF)?

Azure Data Factory (ADF) is a cloud-based data integration service from Microsoft that enables businesses to create, manage, and automate data pipelines.

ADF helps businesses move, transform, and manage their data between different systems, whether those systems run in the cloud or on-premises.

By streamlining and automating these processes, ADF helps keep data up to date and ready for analysis whenever it’s needed. This is essential for modern, dispersed organizations that rely heavily on data to make fast, strategic business decisions.

10 benefits of Azure Data Factory

At its core, Azure Data Factory can help businesses streamline their data processes and improve efficiency, making it a powerful tool for automating data integration and driving better business outcomes.

Here are 10 benefits of ADF to consider:

- Automates data integration. ADF handles moving and transforming data between systems, which saves time and reduces the need for manual data processes.

- Reduces IT overhead. ADF is a fully managed cloud service, so you don’t have to set up or maintain the servers that run it. This lowers both IT workload and operational costs.

- Works with many data sources. Whether your data is stored in the cloud or on-premises, ADF integrates with a wide range of platforms, making it easier to manage all your data in one place.

- Easy to use for all teams. With a simple drag-and-drop interface, even non-technical users can build data workflows, while technical users can use code if needed.

- Offers scheduled and event-based automation. ADF lets you run data tasks on a schedule or trigger them automatically when events occur, such as a new file arriving in storage, helping keep your data up to date.

- Scales as you grow. ADF can handle increasing data volumes as your business grows, and you only pay for what you use, which can help you control costs.

- Improves decision-making. By automating data integration and processing, ADF helps make current data available when teams need it, enabling faster, data-driven decisions.

- Supports advanced analytics. ADF easily integrates with machine learning and analytics platforms, helping businesses leverage their data for insights and predictive modeling.

- Supports data security and compliance. Built-in security features, such as integration with Azure Key Vault for storing credentials, help protect your data and support data management practices that meet regulatory standards, which is crucial for industries like finance and healthcare.

- Reduces manual errors. By automating complex data workflows, ADF minimizes the risk of human errors that can occur during manual data processing, which can lead to more accurate results.

Now let’s talk about how it works.

How ADF works: the basics

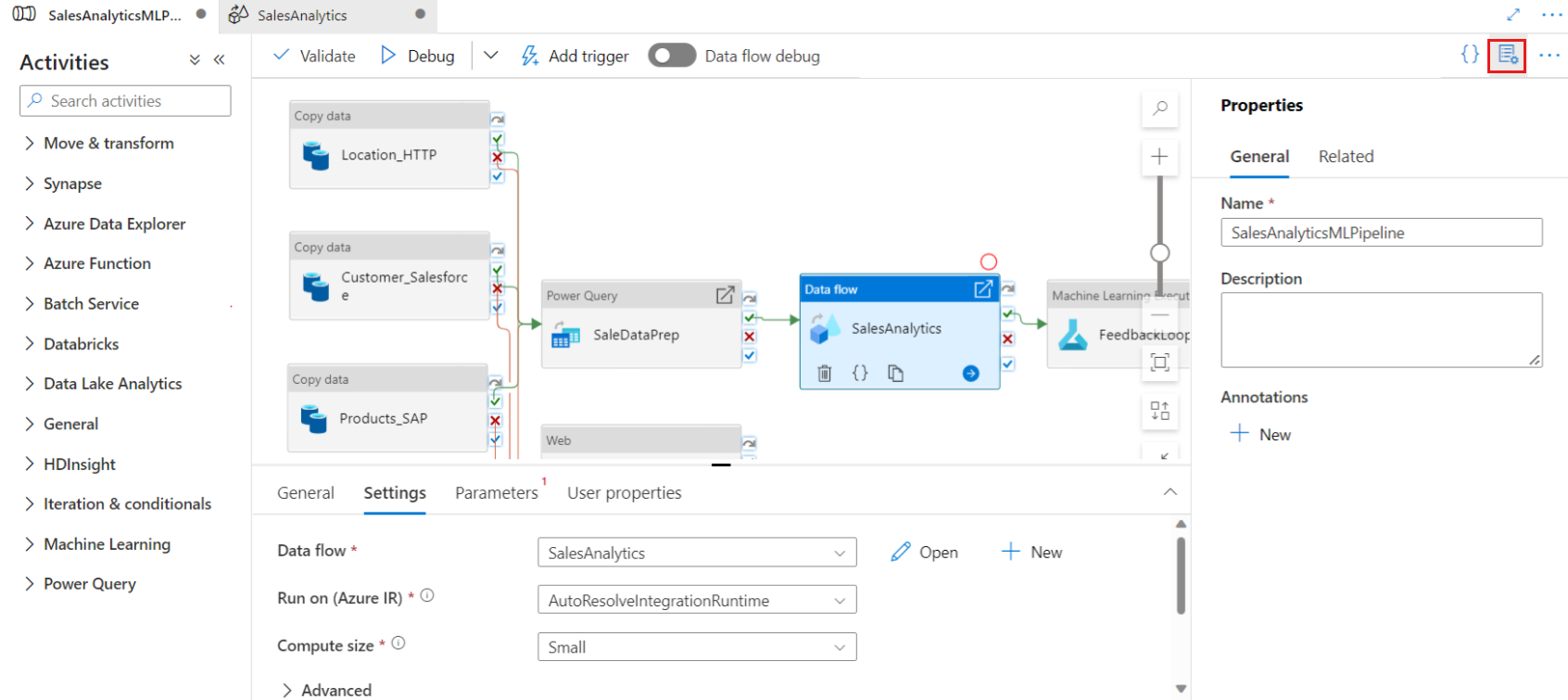

ADF works by facilitating data movement and transformation through five key phases:

- Ingest

- Control flow

- Data flow

- Schedule

- Monitor

Here’s an example to help illustrate what happens in each phase.

A large retail company that operates across multiple global regions needs to clean and centralize its FY2024 sales data. It will use this data to analyze sales performance, both as new data comes in and in later reviews, to support strategic planning and budgeting for the coming fiscal year.

Here’s how ADF can help.

- Ingest. First, ADF connects to multiple sources like sales databases, cloud storage, and APIs from regional stores, gathering data into a central repository for processing.

- Control flow. Next, ADF orchestrates a sequence of actions, ensuring data from all regions is collected first, then processed for consistency, with tasks automatically triggered as each step completes. These actions include data collection, transformation (like cleaning or aggregating data), applying conditional logic to handle errors, and integrating with external services like analytics or machine learning platforms.

- Data flow. Once the data is collected, ADF cleans and transforms the sales data by removing duplicates, aggregating totals, and formatting it for reporting, all without the need for extensive coding.

- Schedule. ADF pipelines can then be set to run daily (or at other specified time intervals) to ensure that sales data from each region is updated automatically and available for decision-making as needed.

- Monitor. Lastly, ADF provides real-time monitoring of the pipeline, allowing the company to track performance, identify and resolve issues, and ensure that data processing remains smooth and efficient.
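To make the control flow and monitoring steps more concrete, here is a small plain-Python simulation of the logic described above. It is not ADF code and does not use any Azure SDK: the regional sources (`emea`, `apac`, `amer_down`) are hypothetical functions, and a simple list stands in for ADF's monitoring view. The sketch tries every region, logs failures instead of crashing, and only aggregates once all regions have been collected.

```python
# Toy simulation of the retail example's control flow and monitoring.
# Plain Python, not the ADF SDK: each regional "source" is a hypothetical
# function, and `log` stands in for ADF's monitoring view.


def run_sales_pipeline(sources, log):
    """Collect data from every region, then total it; record each step in `log`."""
    collected = {}

    # Ingest: try every regional source and record the outcome of each one.
    for region, fetch in sources.items():
        try:
            collected[region] = fetch()
            log.append((region, "Succeeded"))
        except Exception as exc:
            # Error-handling branch: note the failure instead of crashing the run.
            log.append((region, f"Failed: {exc}"))

    # Control flow gate: only process once every region has been collected.
    if len(collected) < len(sources):
        log.append(("aggregate", "Skipped"))
        return None

    total = sum(row["amount"] for rows in collected.values() for row in rows)
    log.append(("aggregate", "Succeeded"))
    return total


# Hypothetical regional sources for the example.
def emea():
    return [{"amount": 120.0}, {"amount": 30.0}]


def apac():
    return [{"amount": 80.0}]


def amer_down():
    raise ConnectionError("regional API timed out")


healthy_log = []
print(run_sales_pipeline({"EMEA": emea, "APAC": apac}, healthy_log))  # 230.0

failed_log = []
print(run_sales_pipeline({"EMEA": emea, "AMER": amer_down}, failed_log))  # None
for entry in failed_log:  # the "monitor" view of the failed run
    print(entry)
```

In ADF itself, the same gating and error handling would be expressed through activity dependencies and conditions in the pipeline, and run history would appear in the monitoring view rather than a Python list.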

By following this workflow, the retail company can integrate and manage data across all of their locations seamlessly, ensuring timely and reliable insights into their sales performance.
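The Schedule phase is usually configured with a trigger. The sketch below builds, as a Python dictionary, a schedule trigger in the general shape of ADF's JSON trigger format, set to run a pipeline once a day. Treat it as an approximation to check against the ADF trigger documentation: the trigger and pipeline names (`DailySalesRefresh`, `RetailSalesPipeline`) and the start time are hypothetical, and the `next_runs` helper is an illustration rather than part of ADF. It simply lists the first few run times that a daily recurrence implies.

```python
import json
from datetime import datetime, timedelta

# Hypothetical daily schedule trigger, shaped like ADF's JSON trigger format.
daily_trigger = {
    "name": "DailySalesRefresh",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",  # other values include Minute, Hour, Week, Month
                "interval": 1,  # run every 1 day
                "startTime": "2025-01-01T06:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "type": "PipelineReference",
                    "referenceName": "RetailSalesPipeline",
                },
                "parameters": {},
            }
        ],
    },
}


def next_runs(trigger, count):
    """Illustrative only: list the first `count` run times of a daily recurrence."""
    recurrence = trigger["properties"]["typeProperties"]["recurrence"]
    if recurrence["frequency"] != "Day":
        raise ValueError("this sketch only handles daily recurrences")
    # fromisoformat() does not accept a trailing "Z" on older Pythons, so swap it.
    start = datetime.fromisoformat(recurrence["startTime"].replace("Z", "+00:00"))
    step = timedelta(days=recurrence["interval"])
    return [start + i * step for i in range(count)]


print(json.dumps(daily_trigger, indent=2))
for run in next_runs(daily_trigger, 3):
    print(run.isoformat())
```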

How to start using Azure Data Factory

The first step in using Azure Data Factory is to design your data pipelines in ADF Studio, the service’s visual authoring tool. It makes it easy to create pipelines that define how data moves and transforms between different sources and destinations.

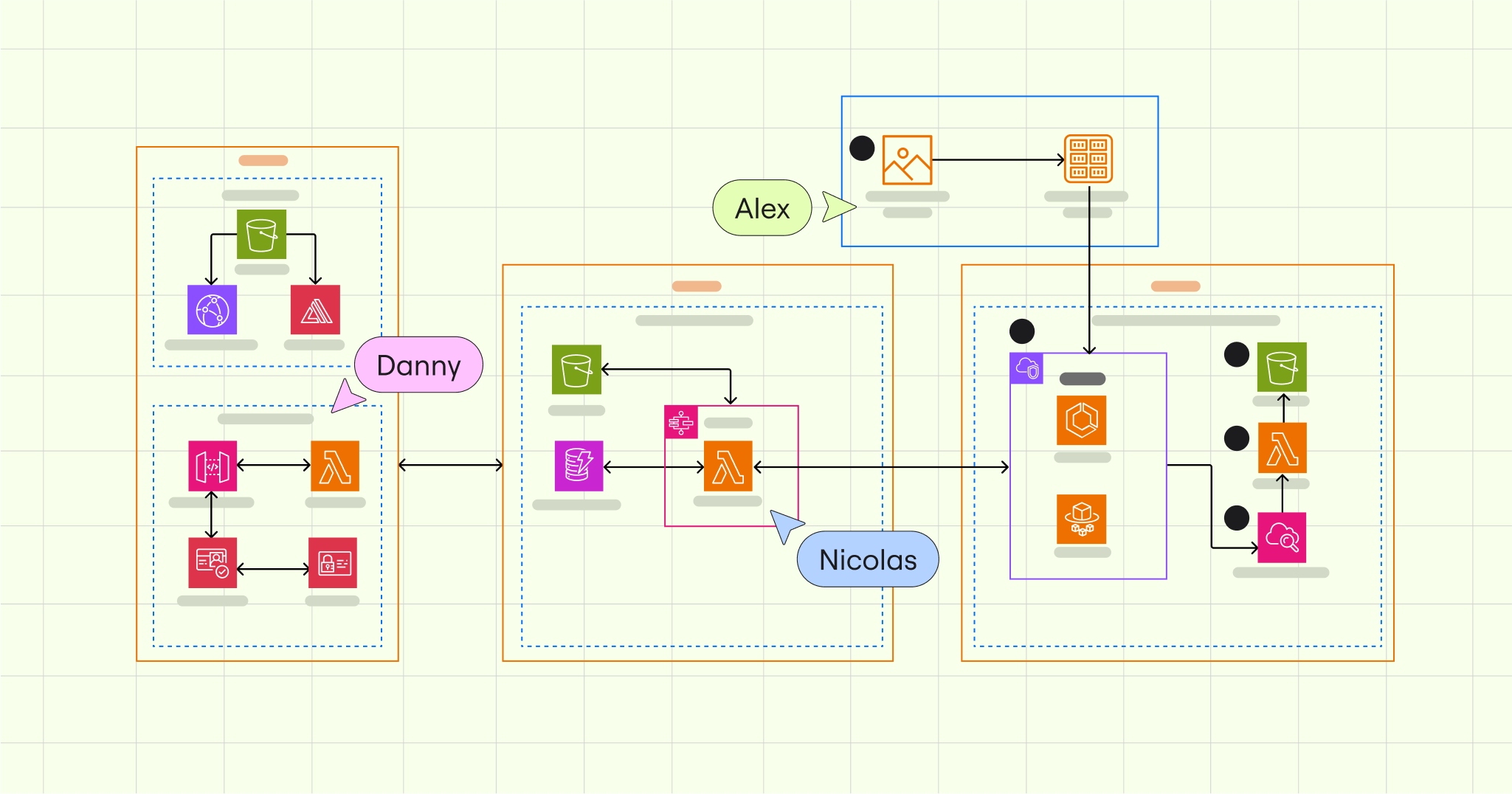
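Behind the visual designer, each pipeline is stored as a JSON definition. The Python dictionary below approximates that format for the retail example: a Copy activity followed by a data flow activity that runs only if the copy succeeds, with the `dependsOn` and `dependencyConditions` fields expressing that control flow. All activity and dataset names are hypothetical. The `activity_order` helper is an illustration built on Python's standard `graphlib` module, not part of ADF; it derives the order in which the dependencies allow the activities to run.

```python
import json
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline, shaped like ADF's JSON pipeline format.
retail_pipeline = {
    "name": "RetailSalesPipeline",
    "properties": {
        "activities": [
            {
                # Copy raw regional sales files into a staging table.
                "name": "CopyRegionalSales",
                "type": "Copy",
                "inputs": [{"referenceName": "RegionalSalesCsv", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "SalesStagingTable", "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "AzureSqlSink"},
                },
            },
            {
                # Clean and aggregate, but only after the copy succeeds.
                "name": "CleanAndAggregate",
                "type": "ExecuteDataFlow",
                "dependsOn": [
                    {"activity": "CopyRegionalSales", "dependencyConditions": ["Succeeded"]}
                ],
                "typeProperties": {
                    "dataFlow": {"referenceName": "SalesCleanupFlow", "type": "DataFlowReference"}
                },
            },
        ]
    },
}


def activity_order(pipeline):
    """Illustrative helper: order activities so each runs after its dependencies."""
    graph = {
        activity["name"]: {dep["activity"] for dep in activity.get("dependsOn", [])}
        for activity in pipeline["properties"]["activities"]
    }
    return list(TopologicalSorter(graph).static_order())


print(activity_order(retail_pipeline))  # ['CopyRegionalSales', 'CleanAndAggregate']
print(json.dumps(retail_pipeline, indent=2))
```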

Alongside the designer, use a visual diagramming tool like Miro’s Azure architecture diagram template to map your overall data flow framework. This template helps you visually organize simple to complex data workflows, so everything is clearly planned before implementation.

As a bonus, Miro templates are fully collaborative and shareable, meaning that your team and key stakeholders can collaborate remotely on your planning documents, either in real time or asynchronously.

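Once your framework is in place, set up linked services to connect ADF to your data sources, such as SQL databases or cloud storage. A linked service works much like a connection string: it holds the information ADF needs to connect to an external resource.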
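For illustration, here is roughly what linked service definitions look like in ADF's JSON format, written as Python dictionaries: one for Azure Blob Storage, one for Azure SQL Database, and one for an on-premises SQL Server reached through a self-hosted integration runtime (the `connectVia` reference). The service names, placeholder connection strings, and runtime name (`OnPremRuntime`) are hypothetical, and in practice you would keep secrets in Azure Key Vault rather than inline. Check the exact properties for each connector in the ADF connector documentation.

```python
import json

# Hypothetical linked services, shaped like ADF's JSON linked service format.
linked_services = [
    {
        # Cloud source: Azure Blob Storage holding regional sales exports.
        "name": "SalesBlobStorage",
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": "<blob-storage-connection-string>"},
        },
    },
    {
        # Cloud destination: Azure SQL Database for cleaned, centralized data.
        "name": "SalesSqlDatabase",
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {"connectionString": "<azure-sql-connection-string>"},
        },
    },
    {
        # On-premises source: a store's SQL Server, reached through a
        # self-hosted integration runtime installed inside the private network.
        "name": "StoreSqlServer",
        "properties": {
            "type": "SqlServer",
            "typeProperties": {"connectionString": "<sql-server-connection-string>"},
            "connectVia": {
                "referenceName": "OnPremRuntime",
                "type": "IntegrationRuntimeReference",
            },
        },
    },
]

for service in linked_services:
    print(json.dumps(service, indent=2))
```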

Next, define datasets that detail where your data is coming from and where it’s going. Datasets in ADF represent the specific data structures (like tables, files, or blobs) that the platform will read from or write to during pipeline execution, helping ensure that the correct data is used and processed in each step of the workflow.
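A minimal sketch of two dataset definitions, again as Python dictionaries approximating ADF's JSON format: a delimited-text (CSV) source in Blob Storage and an Azure SQL table as the destination. Each dataset points at a linked service by name. The dataset and linked service names, container, folder, file, and table shown here are hypothetical. The loop at the end is only an illustration that lists which linked service each dataset reads from or writes to.

```python
import json

# Hypothetical datasets, shaped like ADF's JSON dataset format.
datasets = [
    {
        # Source: FY2024 EMEA sales exported as CSV files in Blob Storage.
        "name": "RegionalSalesCsv",
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": "SalesBlobStorage",
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": "sales",
                    "folderPath": "fy2024/emea",
                    "fileName": "orders.csv",
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
            },
        },
    },
    {
        # Destination: a staging table in Azure SQL Database.
        "name": "SalesStagingTable",
        "properties": {
            "type": "AzureSqlTable",
            "linkedServiceName": {
                "referenceName": "SalesSqlDatabase",
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"schema": "dbo", "table": "SalesStaging"},
        },
    },
]

# Illustrative check: which linked service does each dataset depend on?
for ds in datasets:
    print(ds["name"], "->", ds["properties"]["linkedServiceName"]["referenceName"])

print(json.dumps(datasets[0], indent=2))
```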

Finally, create data flows that transform your data so it’s ready for analysis, for example by filtering out unnecessary information, cleaning up inconsistencies, or aggregating data into summaries, and run them as activities within your pipeline.
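ADF mapping data flows are built visually and executed on ADF-managed Spark clusters, so there is no hand-written code to copy here. As a rough plain-Python illustration of the transformations described above, the sketch below drops unneeded columns, filters out incomplete rows, cleans inconsistent region codes, removes duplicate orders, and aggregates totals per region. The sample rows and column names are hypothetical; in a real data flow, these steps would roughly correspond to transformations such as Select, Filter, Derived Column, and Aggregate.

```python
from collections import defaultdict

# Hypothetical raw sales rows with the kinds of problems a data flow fixes.
raw_rows = [
    {"order_id": "A1", "region": " emea ", "amount": "120.50", "internal_note": "x"},
    {"order_id": "A1", "region": "EMEA", "amount": "120.50", "internal_note": "resent"},
    {"order_id": "B7", "region": "apac", "amount": "80", "internal_note": ""},
    {"order_id": "C3", "region": "AMER", "amount": "", "internal_note": "missing total"},
]

KEEP_COLUMNS = ("order_id", "region", "amount")


def select_columns(rows):
    """Drop columns that reporting does not need."""
    return [{col: row[col] for col in KEEP_COLUMNS} for row in rows]


def filter_and_clean(rows):
    """Filter out rows with no amount; normalize region codes and amounts."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # incomplete row: skip it
        cleaned.append({
            "order_id": row["order_id"],
            "region": row["region"].strip().upper(),
            "amount": float(row["amount"]),
        })
    return cleaned


def remove_duplicates(rows):
    """Keep the first row seen for each order_id."""
    seen, unique = set(), []
    for row in rows:
        if row["order_id"] not in seen:
            seen.add(row["order_id"])
            unique.append(row)
    return unique


def aggregate_by_region(rows):
    """Sum sales amounts per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


summary = aggregate_by_region(remove_duplicates(filter_and_clean(select_columns(raw_rows))))
print(summary)  # {'EMEA': 120.5, 'APAC': 80.0}
```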

Connect, understand, and make the most of your data

Creating data isn’t the problem for modern organizations. Studies project that the world will generate roughly 180 zettabytes of data in 2025, up from 64.2 zettabytes in 2020.

The problem is connecting data. ADF is an essential tool for organizations with expansive but disconnected data pools. It helps connect, aggregate, and standardize data, so you can derive meaningful insights to drive your business forward.