As an Amazon Web Services (AWS) Ambassador candidate and Solutions Architect at Miro, I spend a lot of time thinking about how AI should fit into architecture workflows.

In this guide, I’ll share what I’ve learned about using AI to enhance, not replace, the human expertise that makes or breaks cloud infrastructure decisions. I’ll walk you through how I use Miro’s AI capabilities for AWS diagramming, show you the custom Sidekicks I’ve built for architecture reviews, and explain why I think the visual canvas is the right interface for team-AI collaboration in infrastructure work.

A quick note on agentic AI

Before we dive in, I should be upfront: I don’t believe in fully agentic AI for software engineering — at least, not yet. Here’s why.

You’re still responsible for what AI produces

If AI generates code that takes down your service, the “AI did it” excuse doesn’t fly. That’s firmly in “the dog ate my homework” territory. Until AI companies provide full indemnity against losses from generated code, we need to stand behind every AI-assisted decision. And although AWS’s uncapped IP indemnity for Nova models is an interesting step, a company offering full indemnity for code would be quite brave.

Over-reliance on AI dulls critical thinking

Remember math class? Even with calculators available, you still had to show your work (carry the one, borrow the ten). The lesson wasn’t about getting the right answer — it was about building the critical thinking skills you’d need later.

In engineering, the most experienced leaders have always trained the next generation by letting them take the scenic route. Sure, the senior engineer could do it faster, but letting someone less experienced work through the problem is better for the industry and usually better for the company. If AI does all the work, junior engineers miss that critical thinking practice and even senior engineers lose opportunities to keep their skills sharp. We have to find a compromise, or code will become indecipherable hieroglyphics that nobody understands (a bit like COBOL).

We have yet to unlock the full potential of AI workflows

Gartner recently moved generative AI into the “Trough of Disillusionment” phase of the hype cycle, and plenty of CEOs are getting impatient for the returns they were promised. OpenAI has been consistent from the start about the solution: Human-in-the-Loop (HITL). Their safety best practices explicitly recommend human review of outputs wherever possible, especially in high-stakes domains and for code generation.

The next stage of AI effectiveness will be in the team-AI interface. And I genuinely think Miro offers that perfect mix: AI capabilities combined with team-led checks and balances, curation, and decision-making, all on a shared canvas where teams can collaborate.

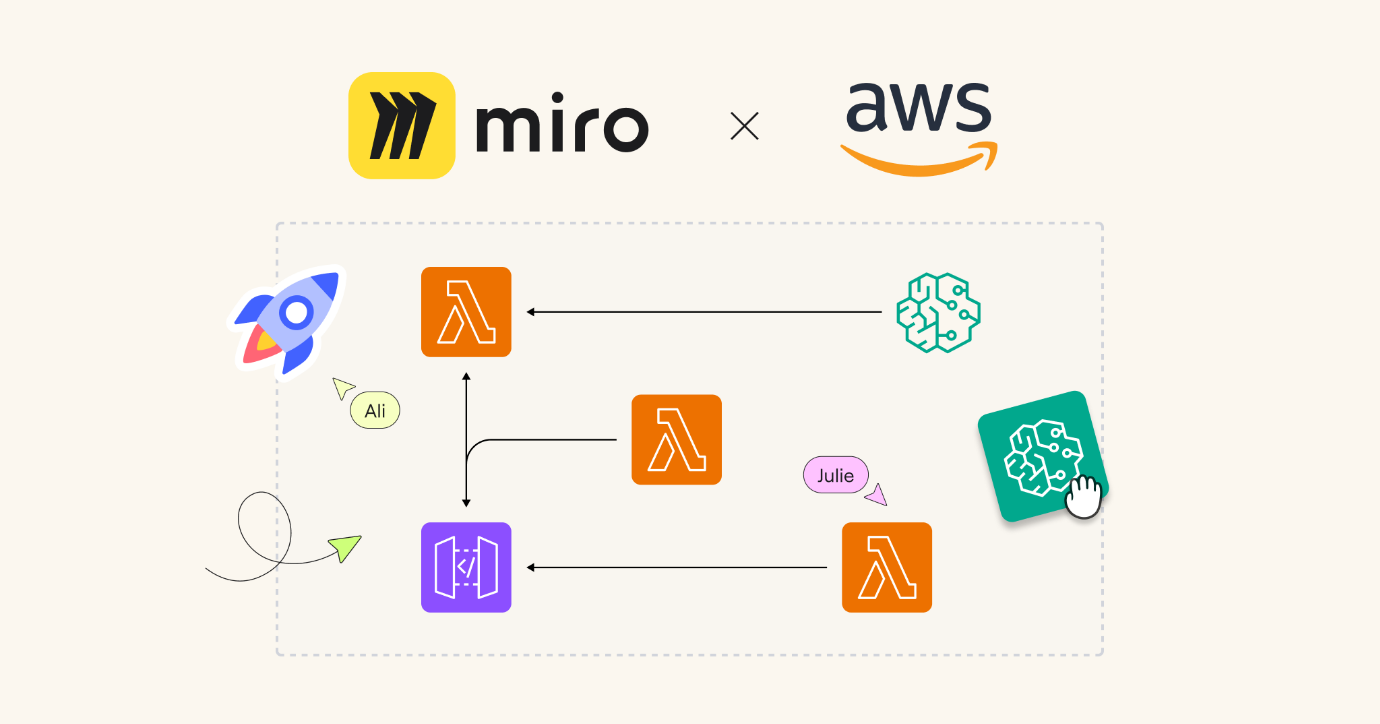

Miro’s AI capabilities for AWS work

Miro brings AI directly into the visual workspace where architecture design already happens. This matters because it means AI augments team collaboration, not just individual productivity. Here’s how it works:

Sidekicks

Sidekicks are conversational AI agents built for specific use cases. When you create your own, you define the “Instructions” (think of this as the system prompt) and upload files as “Knowledge Sources” (essentially Retrieval-Augmented Generation, or RAG). You can choose between Amazon Bedrock, OpenAI, and Gemini models for the chat interface, document creation, and image generation.

There are two Sidekicks by Miro available for AWS workflows, both currently in beta:

- AWS Solution Architecture Sidekick: This one examines your AWS diagrams in Miro, asks questions about your goals around availability and resiliency, then recommends improvement packages. Each package includes the services you should add, why you should add them, and where on the diagram to place them (it’ll even recommend layers). The Sidekick tends to put its output in the chat rather than creating new board elements.

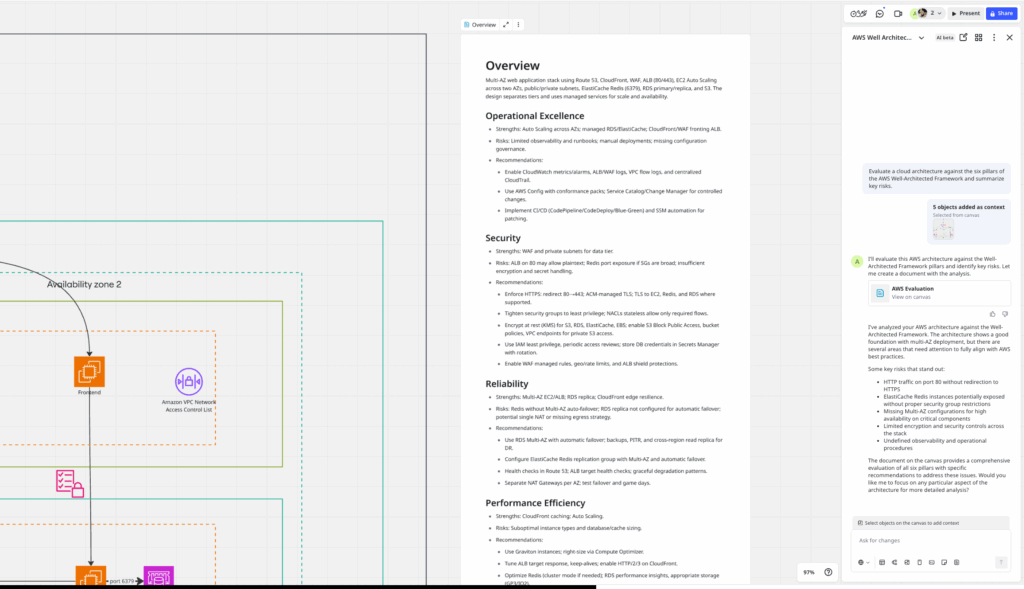

- AWS Well-Architected Sidekick: This Sidekick reviews your diagram through the lens of the Well-Architected Framework’s six pillars: Operational Excellence, Security, Reliability, Performance Efficiency, Cost Optimization, and Sustainability. It’s biased toward producing a document directly on the Miro board, basically a report of what could be improved from a Well-Architected perspective.

Flows

The typical LLM experience has been single-threaded: you chat with the model, one message at a time. But what if you discover a sequence of LLM requests you want to repeat? That’s where Flows come in.

Flows is essentially node-based AI programming where you can link multiple models together. You’re breaking down the single-thread AI workflow and turning it into a tapestry of different models and system prompts.

For example, you could create a Flow that takes an AWS diagram and produces both CDK and Terraform bootstrap templates in parallel. Same input, two different outputs, executed automatically.
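To make the fan-out shape concrete, here is a minimal Python sketch. It is not Miro’s Flows API: `to_cdk`, `to_terraform`, and `run_flow` are hypothetical names, and the two step functions are stubs standing in for model calls with different system prompts.

```python
from concurrent.futures import ThreadPoolExecutor


def to_cdk(diagram: dict) -> str:
    # Hypothetical stand-in for a model step prompted to emit CDK code.
    return "\n".join(f"# CDK placeholder for {svc}" for svc in diagram["services"])


def to_terraform(diagram: dict) -> str:
    # Hypothetical stand-in for a model step prompted to emit Terraform.
    return "\n".join(f"# Terraform placeholder for {svc}" for svc in diagram["services"])


def run_flow(diagram: dict) -> dict:
    # Fan the same input out to both steps and collect both outputs.
    with ThreadPoolExecutor(max_workers=2) as pool:
        cdk_future = pool.submit(to_cdk, diagram)
        terraform_future = pool.submit(to_terraform, diagram)
        return {"cdk": cdk_future.result(), "terraform": terraform_future.result()}


# Illustrative input: a flattened list of services read from a diagram.
outputs = run_flow({"services": ["cloudfront", "alb", "ec2"]})
```

The point of the sketch is the structure: one input, two independent branches, and a single place where a human can review both results side by side.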

AWS diagramming in Miro

Let me show you how diagramming actually works in Miro. There are two approaches:

Manual diagramming with shapes

Click the plus symbol in the left sidebar and search for “diagramming shapes.” By default, the AWS shapes should be enabled, but if not, go to “Manage Shapes” and check the box for AWS. I like to temporarily disable other shape libraries to make it easier to select only AWS shapes.

AWS Cloud View App

If you have existing cloud architecture you want to bring onto the board, you can use the AWS Cloud View app. It connects to your AWS account with a cross-account role, and once that’s set up, you can import your AWS resources and filter by region, resource type, and tags.
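If you haven’t set up a cross-account role before, the core of it is a trust policy that lets a principal in another AWS account assume the role. The Python sketch below builds a generic one. The account ID and external ID are placeholders, and whether the app uses an external ID at all is my assumption; take the real values from the app’s setup instructions.

```python
import json


def build_trust_policy(trusted_account_id: str, external_id: str) -> dict:
    # Generic IAM trust policy: allow the trusted account to call sts:AssumeRole,
    # but only when it presents the agreed external ID.
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


# Placeholder values for illustration only.
policy = build_trust_policy("111122223333", "example-external-id")
policy_json = json.dumps(policy, indent=2)
```

The permissions attached to the role are a separate decision. For an import-only integration, I would start from read-only access and widen it only if something is missing.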

Example: Scaling a simple e-commerce app

Let’s work through a realistic scenario. Imagine we’ve created a successful e-commerce website in one region, and now we want to scale it globally.

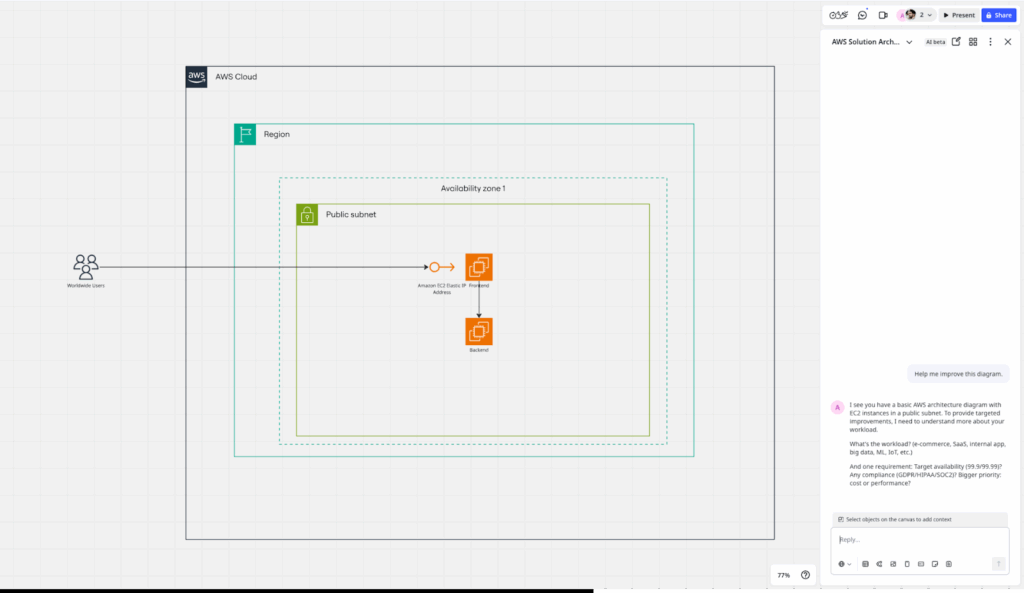

If you’ve been in cloud architecture for a while, you’ll recognize that this design won’t scale to a global user base. It’s not highly available, latency will be an issue, and we’re not taking advantage of the services AWS provides to help us scale efficiently. Users connect directly to an Amazon Elastic Compute Cloud (Amazon EC2) instance via an Elastic IP address, both the frontend and backend sit in a public subnet, and everything runs in one Availability Zone in one Region.

Using the AWS Sidekicks to improve the design

I’ll select the AWS Solution Architecture Sidekick and start with the prompt: “Help me improve an AWS architecture.”

The Sidekick asks for context first:

- What’s the workload (e-commerce, SaaS, internal app, big data, ML, IoT, etc.)?

- Could you share a requirement: Target availability (99.9/99.99)?

- Any compliance needs (GDPR/HIPAA/SOC2)?

- What’s the bigger priority: cost or performance?

This questioning is important. Not every AWS architecture needs to be a global, highly available, ultra-performant Kubernetes-hosted super app. If it’s an internal app with low availability requirements, sometimes quick and simple is fine.
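It helps to translate an availability target into a downtime budget before answering. The short Python sketch below does that arithmetic, assuming a 365-day year; the function name is mine.

```python
def allowed_downtime_minutes(availability_percent: float, period_hours: float = 365 * 24) -> float:
    # Fraction of the period the service is allowed to be unavailable.
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * period_hours * 60


three_nines = allowed_downtime_minutes(99.9)   # about 525.6 minutes per year
four_nines = allowed_downtime_minutes(99.99)   # about 52.6 minutes per year
```

Going from 99.9 to 99.99 shrinks the yearly budget from nearly nine hours to under an hour, which is why that one answer changes the architecture so much.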

For this walkthrough, I’m going to push the requirements to be a bit aggressive so we can show what the Sidekick can really do. Let’s say this is an e-commerce site. It must be highly available, we need to keep GDPR in mind, and cost is the number-one priority.

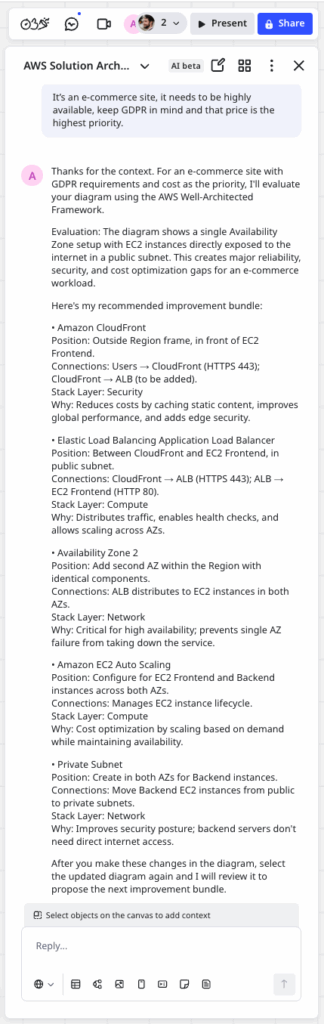

Right away, the Sidekick flags major reliability and security gaps for a customer-facing app: we’re in a single Availability Zone and our EC2 frontend is directly exposed to the internet. That’s both risky and expensive in the long run.

The first recommendation is to put Amazon CloudFront in front of everything. This cuts global latency and shields the site by caching static content—great for user experience and great for cost.

Next, it suggests adding an Application Load Balancer (ALB). The ALB lets us distribute traffic across multiple EC2 instances and, importantly, across multiple Availability Zones. That unlocks health checks, graceful failover, and horizontal scaling.

Because traffic for retail can spike hard and drop just as fast, the Sidekick adds EC2 Auto Scaling groups for the frontend and backend tiers. That way we scale out when demand arrives and scale in when it doesn’t, keeping performance high and costs under control.

It then recommends introducing a second Availability Zone. If the original AZ has an incident, we can keep serving customers from the other AZ. We mirror the EC2 capacity there so anyone reading the diagram can see that cross-AZ resilience is actually implemented, not just implied.
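A rough way to see why the second AZ matters is the standard formula for redundant components. The Python sketch below assumes each AZ fails independently and ignores shared dependencies, and the 99% per-AZ figure is illustrative, not an AWS number.

```python
def combined_availability(per_az_availability: float, az_count: int) -> float:
    # The system is down only if every AZ is down at the same time
    # (assumes independent failures, which is a simplification).
    return 1 - (1 - per_az_availability) ** az_count


single_az = combined_availability(0.99, 1)  # 0.99
two_azs = combined_availability(0.99, 2)    # approximately 0.9999
```

Real numbers will be lower because the tiers share things like the database and the deployment pipeline, but the direction is the point: redundancy multiplies the failure probabilities together.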

Finally, for security and GDPR hygiene, it moves backend instances into private subnets and keeps only the ALB public. That tightens our exposure, simplifies network policy, and helps us meet “privacy by design” expectations without adding unnecessary spend.
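If you want to sanity-check the network layout before drawing it, the public and private split across two AZs can be planned with Python’s standard `ipaddress` module. The VPC CIDR, the /24 subnet size, and the AZ names below are assumptions for illustration, not values from the Sidekick.

```python
from ipaddress import ip_network


def plan_subnets(vpc_cidr: str, azs: list, prefix: int = 24) -> list:
    # Carve consecutive, non-overlapping blocks out of the VPC range:
    # one public and one private subnet per Availability Zone.
    blocks = ip_network(vpc_cidr).subnets(new_prefix=prefix)
    plan = []
    for tier in ("public", "private"):
        for az in azs:
            plan.append({"tier": tier, "az": az, "cidr": str(next(blocks))})
    return plan


# Placeholder VPC range and AZ names.
layout = plan_subnets("10.0.0.0/16", ["eu-west-1a", "eu-west-1b"])
```

In this layout only the ALB would live in the public subnets, with the frontend and backend instances in the private ones.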

We’ll implement this bundle first—CloudFront, ALB, Auto Scaling, a second AZ, and private subnets—then ask the Sidekick for the next iteration (for example, WAF, centralized logging, and encryption controls) once these foundations are in place.

After implementing the recommended changes:

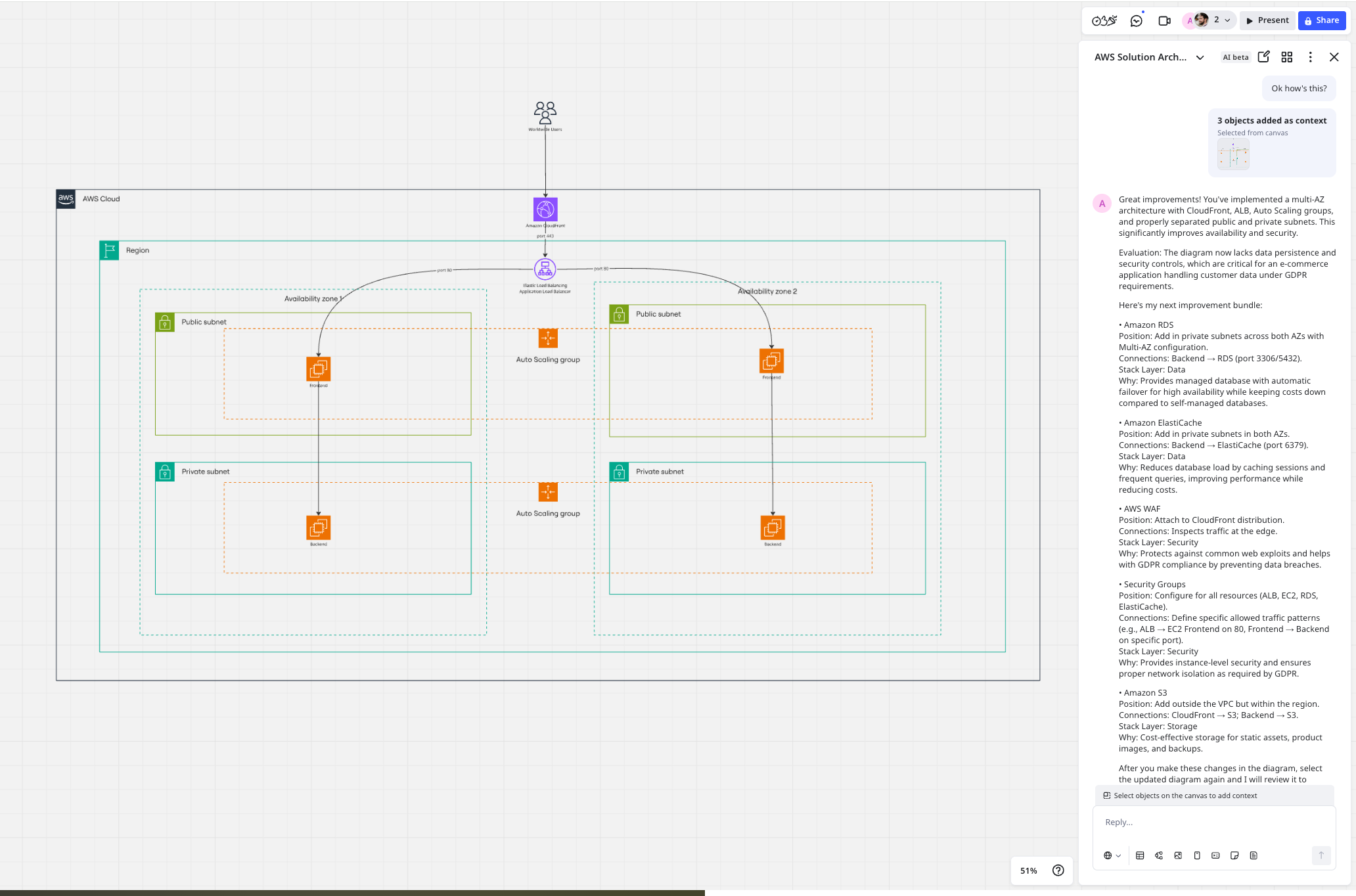

Very quickly, we’ve gone from a single-Availability-Zone, single-region app to a much better design. Now that we’ve implemented the changes, we can ask our Sidekick for feedback again. It recognizes the changes we’ve made and suggests another improvement package.

After a few iterations, the Sidekick will tell us that the diagram has improved a lot and start leaning toward optional improvements. At that point, you can decide for yourself when to stop.

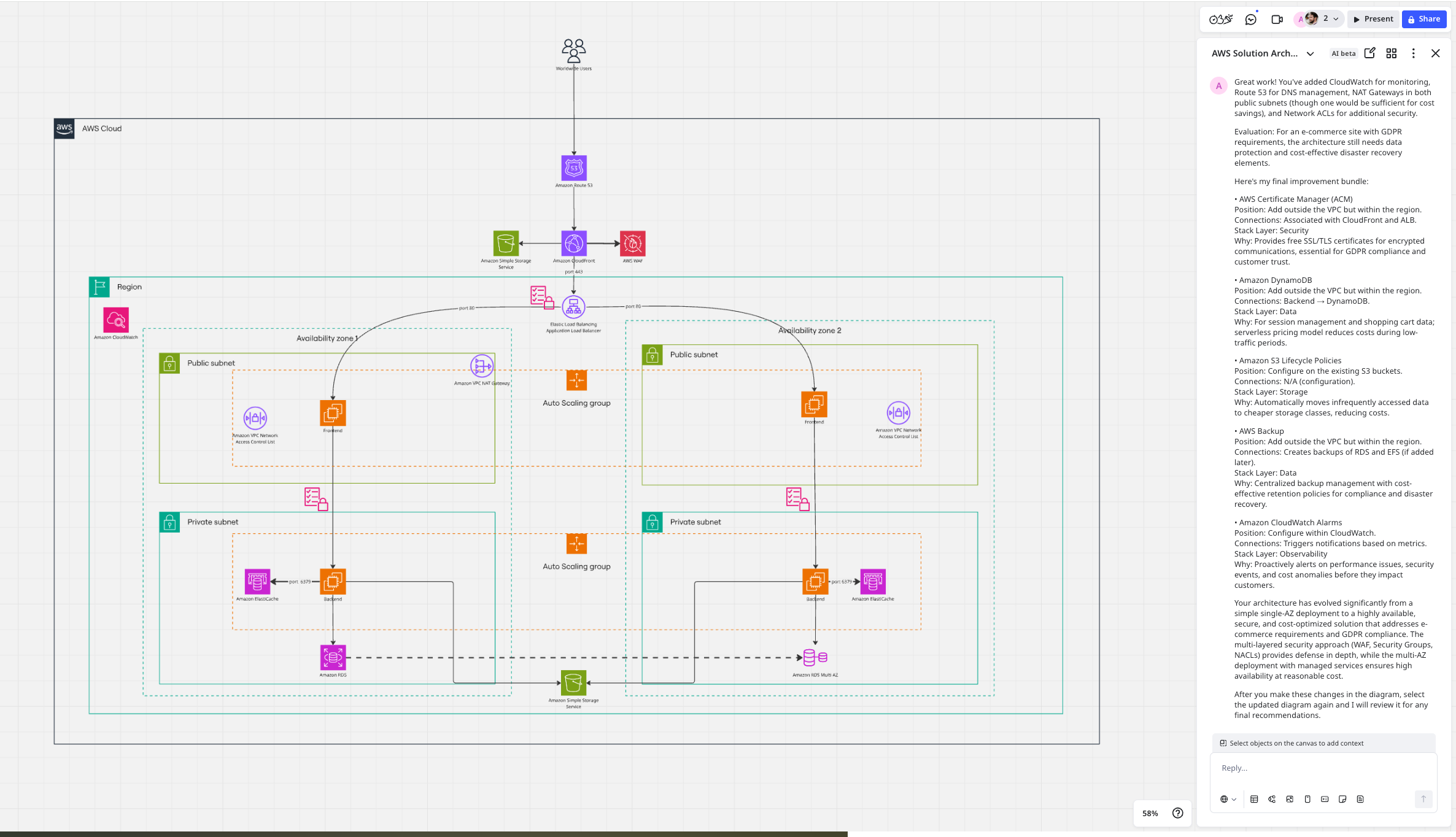

We’ll end up with an architecture like the one below that meets our initial requirements.

As you can see, we’ve gone from an architecture that wasn’t suitable for our use case to a detailed one that follows many of AWS’s best practices.

Now let’s open the AWS Well-Architected Sidekick and get its assessment. This is typically a more thorough review with absolute best practices in mind.

The Well-Architected review goes into much more detail about AWS best practices. It assesses the design against the six pillars of the Well-Architected Framework: Operational Excellence, Security, Reliability, Performance Efficiency, Cost Optimization, and Sustainability. Usually you would go through a Well-Architected questionnaire assessment with AWS or a partner. If you’d prefer a more conversational approach, the Well-Architected Sidekick will happily give you pointers and produce a report of recommendations with the framework in mind.

Why this approach works

So very quickly, we can diagram, improve our AWS architecture, and validate it against best practices, all in one place. Previously, you’d use multiple tools, copy and paste text and screenshots between them, and struggle to bring your non-AI-savvy colleagues and clients into the design discussion.

With Miro, the canvas becomes the shared space where AI assistance and team expertise converge. Your entire team can see the architecture, understand the AI’s recommendations, and make decisions together. That’s the interface that makes AI effective for infrastructure work.

This is the first post in a series exploring Miro’s AI-powered workflows. Future posts will provide practical guidance for integrating AI into your cloud architecture practice.